I'm increasingly obsessed with my own fitness.

I've been closing my rings on my Apple Watch ever since I first put it on my wrist back in 2015.

Through the pandemic and working from home, Apple Fitness+ became a helpful motivator for getting me to try new workouts and experiment with yoga, meditation, heck, even dance – all without leaving the house.

I don't feel overweight – I feel "just about right" – but as I shift into a new decade of my life, I'm increasingly conscious of the fact that I won't be able to eat whatever I want, whenever I want, forever.

So I recently started using a great app, LoseIt!, to help track the other half of my fitness – what I consume. While Apple Watch tracks the calories I burn and helps me keep fit, LoseIt! tracks what I put in – helping me to balance that all-important equation: "calories IN minus calories OUT = a negative number" if I want to lose weight.

I'm new to this calorie-counting game – I never thought I'd be "one of those people" who asks how many calories are in a meal or in a cereal bar. I never thought I'd be someone who said no to a sweet treat. But increasingly, I am thinking twice about every snack I eat, and every portion of food I see on a plate.

Along with tracking the calories I'm consuming, I'm also trying to keep track of WHEN I eat food. I've been trying to obey a stricter schedule for when I wake, when I eat each meal, and when I get to sleep. According to some, WHEN you eat is just as important as, if not more important than, WHAT you eat.

I'm conscious that when I eat, I am usually with people – friends, family, my partner, colleagues. I don't want to be sitting with a plate of delicious food, poking at my phone trying to add things to a calorie-tracking app. Instead, I have found the least distracting, most effective solution is to simply snap a quick photo of the meal I'm eating, as subtly as I can.

By snapping a photo I can grab an instant snapshot of the meal I had, along with the size, and the time I was about to eat it – which I can add to LoseIt! at a later date when I have more time to note down and clarify the details.

There are a few rumours circulating that Apple may be bringing some form of food tracking functionality to iOS 15. This is something I am rather excited about – and if it's true, I can't wait to see how this works, and how accurate it will be. I'm sure Apple can find an innovative way to solve some of these complex problems.

I'm also excited to see what a company with the resources of Apple can do in a problem space that is so critically important to the health of every human on the planet: the food we consume. For so long, health in a digital context has been focused around the exercise we do, primarily because that's become a solved problem – and the other problems are harder.

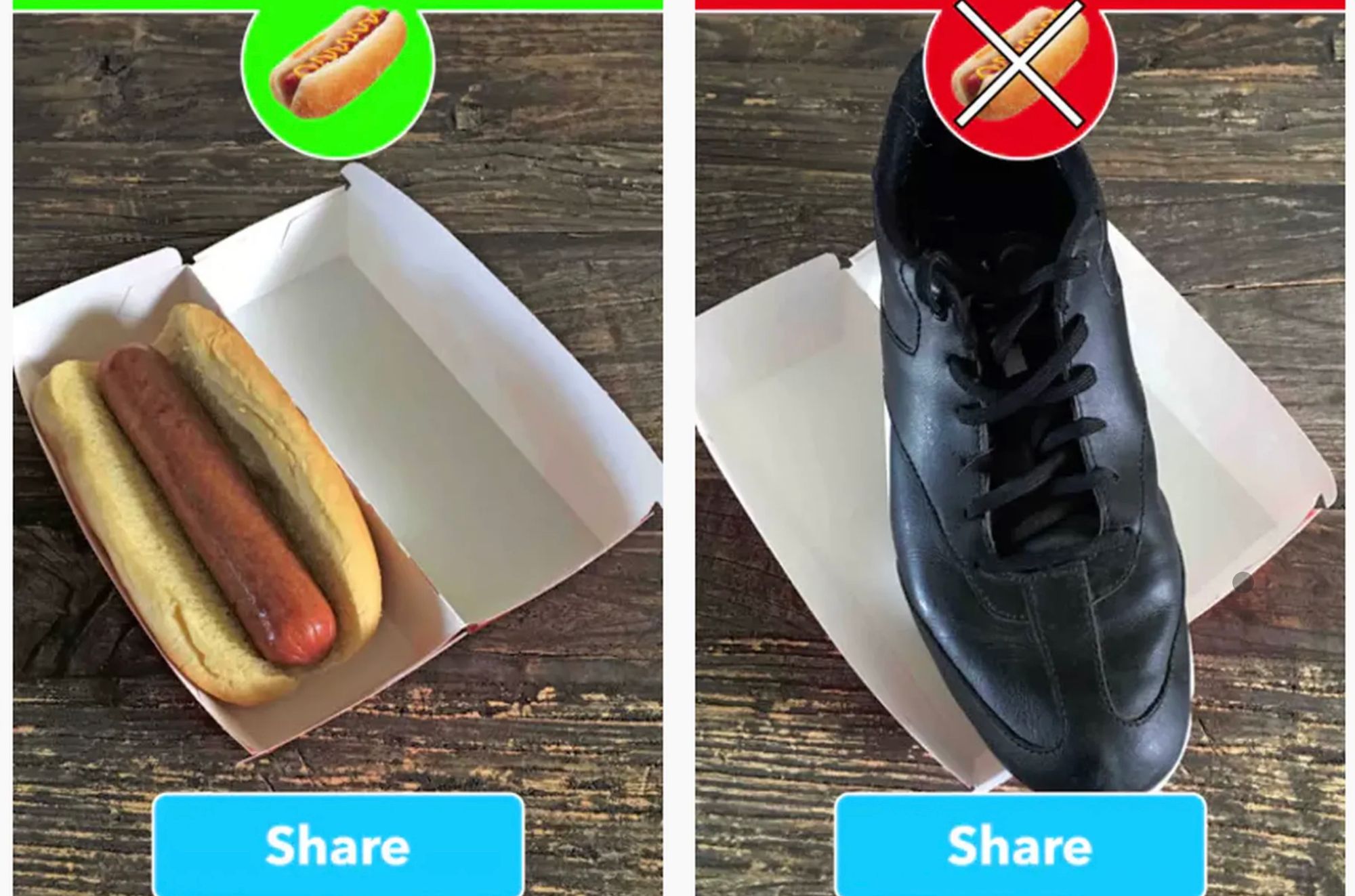

Perhaps to begin with, Apple Nutrition will do much the same as my "snap a photo and add it to LoseIt! later" approach. But my mind jumps to the future where Apple's AR glasses are real and being used in daily life – imagine picking up a cereal bar and being told it's the perfect food to eat right now. Or a warning sign next to the hot dog stand you're walking past pushing you to think twice before satisfying your cravings.

Can Apple make tracking and improving food consumption a first-class experience in its health ecosystem? Will nutrition and food identification become the "killer app" of AR? Will any of this make me any less likely to eat a cinnamon bun with my coffee in the mornings? I can't wait to find out.